Knowledge Technologies and the Asymmetric Threat

Paul S. Prueitt, PhD

Research Professor

Cyber Security Policy & Research Center

George Washington University

3/16/03

Notes

Note on Artificial Intelligence

Note on Language and Linguistics

Knowledge Technologies and the Asymmetric Threat

New methodology will soon allow the continuous measurement of worldwide social discourse. This measurement can be transparent and can have built-in protection of individual privacy. Web spiders provide the instrumentation for the measurement. Natural language processing and machine-ontology construction provide a representation of social discourse.

Privacy protection is built in by organizing the information at two different levels. At one level is a stream of data, most of which is processed into abstractions about the invariances in linguistic variation. At the other level is an archive of invariance types and of compositional rules describing how grammar is actually observed to be used in the construction of meaning. Between this grammatical layer and the data stream one can place Constitutional restrictions that require judicial review before individual data elements are investigated.
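A minimal Python sketch of this two-level separation follows. The `StratifiedStore` class, the use of plain word counts as stand-ins for invariance types, and the `warrant` argument standing in for judicial review are illustrative assumptions, not the author's design.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StratifiedStore:
    """Hypothetical two-level store: individual records below, abstractions above."""
    _records: list = field(default_factory=list)            # lower layer: (author, text)
    abstractions: Counter = field(default_factory=Counter)  # upper layer: word counts only

    def ingest(self, author: str, text: str) -> None:
        self._records.append((author, text))
        # Only author-free aggregate counts reach the upper layer.
        self.abstractions.update(text.lower().split())

    def drill_down(self, word: str, warrant: Optional[str] = None) -> list:
        """Return individual records using `word`, but only with an explicit warrant."""
        # The warrant check stands in for the judicial-review barrier.
        if not warrant:
            raise PermissionError("judicial review required before accessing individual records")
        return [(a, t) for a, t in self._records if word in t.lower().split()]
```

Reading `abstractions` is always allowed, while calling `drill_down` without a warrant raises `PermissionError`; the barrier sits exactly at the step from the abstract layer to the data stream.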

Put simply, linguistic and social scientists develop a real-time, evolving model of how languages are used to express intention, but the measurement of social discourse cannot be used indiscriminately, without court supervision, to investigate the actions and behavior of individuals.

General systems properties and social issues involved in the adoption of this methodology are outlined in this paper. Technical issues related to logic, mathematics and natural science are touched on briefly. A full treatment requires an extensive background in mathematics, logic, computer theory and human factors. That technical treatment is open and public, and is being published by a not-for-profit science association, BCNGroup.org, and by OntologyStream.com.

Community building and community transformation have always involved complex processes that arise from human interaction in the form of social discourse. Under unfortunate cultural conditions, these same processes support the development of asymmetric threats.

Community building and transformation are also part of the response to asymmetric threats. But informational transparency is needed to facilitate the social response to the causes of these threats.

Knowledge management models involve components that are structured around lessons learned and lessons encoded into long-term educational processes. These educational processes must be legitimate if knowledge of the structure of social discourse is to have high fidelity. The coupling between measurement and positive action has to be public and transparent in practice, not simply as a matter of public policy. Otherwise the information will be subject to narrow interpretation and, more often than not, to false sense-making.

As a precursor to our present circumstance, for example, Business Process Reengineering (BPR) methodologies provided AS-IS models and TO-BE frameworks. But these methodologies often did not work well because the AS-IS model lacked high fidelity to the nature and causes of the enterprise. Over the past several decades, various additional knowledge management disciplines have been developed and taught.

Knowledge management practices show deficits in scholarship and methodology. These deficits are due to a type of shallowness and to the intellectual demands of understanding the stratification and encapsulation of individual and social intention. The shallowness of the discipline of “knowledge management” is rooted in economic fundamentalism.

The war calls for a new maturity on issues of viewpoint and truth-finding, and for the rejection of all forms of fundamentalism. This maturity is needed because our response to terrorism’s fundamentalism has tended to engage forms of ideological fundamentalism within our own society. America has a strong multi-cultural identity, as well as a treasured mechanism for political renewal. When challenged by fundamentalism, we rise to the challenge. In this case, we are called on to reinforce multi-culturalism.

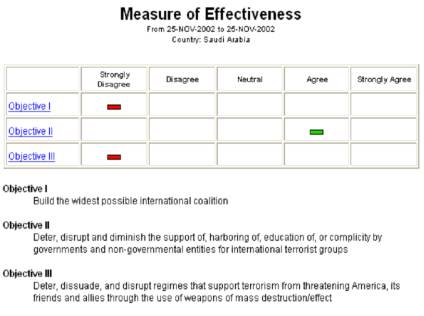

Figure 1: Experimental system producing polling like output

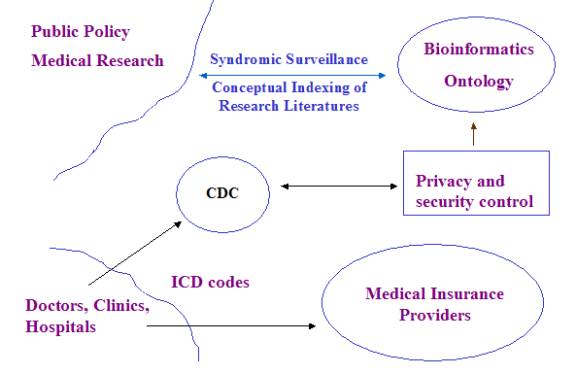

One can argue that something is missing from the technologies being acquired for intelligence vetting within the military defense system. Clearly, biodefense information awareness requires much more than what DARPA’s Total Information Awareness (TIA) program contemplates. Because biodefense must interface with medical science, Biodefense Information Awareness (BIA) systems also require something different: more openness to initial scholarly review of what is planned, and more openness to commercialization when implemented.

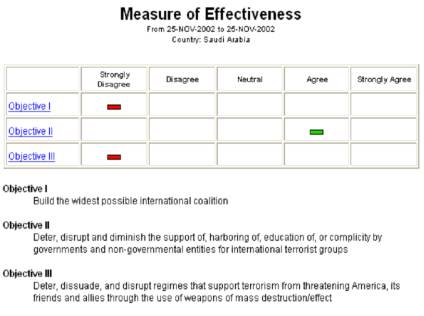

Figure 2: Proposed Biodefense Information Awareness

The complex interior of the individual human is largely unaccounted for in first attempts at measuring the thematic structure of social discourse. But the individual is where demand for social reality has its primary origin. So what is missing is an understanding of the complexity of social discourse.

What is missing is the application of the available science on social expression and individual experience. This science is not yet funded because current procurement processes are narrow and, not surprisingly, focused on near-term issues related to continued funding for corporate incumbents. A bootstrap is therefore needed to shift the focus away from methodology and philosophy that is unsophisticated and does not account for social complexity.

Many factors explain why commercial information technology does not accommodate, for example, individual variation in response patterns. Some are technical issues related to limitations in design agility. Many are related to the commercialization of information production and use. But the deepest problems are related to the nature of formal systems.

These problems have not been settled in academia, and so academia offers no clear guidance. But the issues have been laid out in the scholarly literature.

Scholarship informs us that natural language is NOT a formal system. One would suspect that even children already know this about natural language. Perhaps the problem is in our cultural understanding of mathematics and science.

Yes, abstraction is used in spoken language; but a reliance on pointing and non-verbal expression helps to ground the interpretation of meaning in a social discussion as it occurs and is experienced. So the abstraction involved in language is grounded in circumstances. These circumstances are experienced as part of the process of living. The experience relies on one’s being in the world as a living system with awareness of self. This experience is not an abstraction. Again, even children already understand this.

One can reflect on the fact that natural circumstances and the technical representations of context by computer programs are NOT the same. Many scholars have come to believe that circumstance is not something that can be fully formalized. But the confusion within academia is profound.

Written language extends the capability to point, using language signs, at the non-abstract: at what is NOT said but is experienced. Human social interaction has evolved to support the level of understanding that living humans, within culture, need to form social constructs. But computer-based information systems have so far failed to fully represent human tacit knowledge, even though computer networks now support billions of individual human communicative acts per day via e-mail and collaborative environments.

So, one observes a mismatch between human social interaction and computers.

How is the mismatch to be understood?

We suggest that the problem is properly understood in the light of a specific form of complexity theory.

An evolution of natural science is moving in the direction of a stratification of formal systems using complexity theory. A number of open questions face this evolution, including a renewed resolution of the notion of the non-finite and of the notion of an axiom, and the development of an understanding of human induction. Induction, in counter-position to deduction, is seen as a means to "step away from" the formal system and observe the real world directly.

It is via this modification of constructs lying within the foundations of logic and mathematics that an extension of the field of mathematics opens the way to a new computer science.

The “artificial intelligence” failure can be, and often is, viewed as simply due to humans not yet understanding how to develop the right types of computer programs. This is an important viewpoint that has led to interesting work on computer representation of human and social knowledge.

But put quite simply, a representation of knowledge is an abstraction and does not have the physical nature required to be an “experience” of knowledge.

The fact that humans experience knowledge so easily may lead us to expect that knowledge can be experienced by an abstraction. We may even forget that the computer program, running on hardware, does what it does based on a machine reproduction of abstract states. These machine states are Markovian, a mere mathematical formalism. By this we mean that each state has no dependency on the past or the future, except as specified in the abstractions of which the state is an instantiation.
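The Markovian property described here can be illustrated with a toy finite-state machine. The states, input symbols and transition table below are invented for illustration; the point is only that two different histories arriving at the same state behave identically from then on, because the machine keeps no record of how it got there.

```python
# Hypothetical transition table: (current_state, input_symbol) -> next_state.
TRANSITIONS = {
    ("idle", "query"): "searching",
    ("searching", "hit"): "reporting",
    ("searching", "miss"): "idle",
    ("reporting", "ack"): "idle",
}


def step(state: str, symbol: str) -> str:
    """Next state depends only on the current state and the input, never on history."""
    return TRANSITIONS[(state, symbol)]


def run(state: str, symbols: list[str]) -> str:
    """Feed a sequence of symbols through the machine and return the final state."""
    for s in symbols:
        state = step(state, s)
    return state
```

For example, `run("idle", ["query", "hit", "ack"])` and `run("idle", ["query", "miss"])` both end in `"idle"`, and any continuation from there produces the same result for both: the past survives only to the extent it is encoded in the abstract state.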

There is no dependency on the laws of physics either, except as encoded into other abstractions.

This fact separates computer science and natural science.

The tri-level architecture models the relationship between memory of the past, awareness of the present, and anticipation of the future. However, even once this machine architecture is in place, we will still be working with abstraction and not with a physical realization of (human) memory or anticipation.

Stratification seems to matter, and may help with issues of consistency and completeness, the Gödel issues in the formal foundations of logic. The clean separation of memory functions and anticipatory functions allows one to bring experimental neuroscience and cognitive science into play.

The measurement of the physical world results in abstraction. The measurement of invariance produces a finite class of categorical Abstractions (cA), which we call cA atoms. cA atoms have relationships that are expressed together in patterns, and these patterns then correspond to some aspects of the measured events. The cA atoms are the building blocks of events, or at least of the abstract class of events that can be developed by looking at many instances of events of various types.
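One way to picture the measurement of invariance is sketched below, under the assumption that "invariance" can be approximated by recurrence: a feature counts as a cA atom if it appears in at least `min_support` observed event instances. The function names and the threshold rule are hypothetical stand-ins, not the author's categorical abstraction procedure.

```python
from collections import Counter


def extract_atoms(instances: list[set[str]], min_support: int = 2) -> set[str]:
    """Keep only features that recur across at least `min_support` event instances."""
    counts = Counter(f for inst in instances for f in inst)
    return {f for f, n in counts.items() if n >= min_support}


def express(instance: set[str], atoms: set[str]) -> frozenset[str]:
    """Re-express one event instance as the pattern of atoms it contains."""
    return frozenset(instance & atoms)
```

The resulting atom set is finite by construction, and each instance is reduced to a pattern over that set; one-off features such as a single rumor simply drop out of the abstraction.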

Anticipation is then regarded as expressed in event Chemistries (eC), and these chemistries are encoded in a quite different type of abstraction, similar in nature to natural language grammar.
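The grammar analogy can be sketched, loosely, by treating an event chemistry as a table of compositional rules, each stating which cA atoms compose a given event type, much as a grammar production composes symbols. Anticipation then amounts to noticing partially matched event types and listing the atoms not yet observed. The rule table, atom names and flat set-based matching are illustrative assumptions; a real grammar would also capture order and nesting.

```python
# Hypothetical event chemistry: event type -> atoms that compose it,
# loosely analogous to a grammar production EVENT -> atom1 atom2 ...
CHEMISTRY = {
    "exchange": frozenset({"meeting", "payment"}),
    "transit": frozenset({"payment", "crossing"}),
    "rally": frozenset({"meeting", "announcement", "crowd"}),
}


def anticipate(observed: set[str]) -> dict[str, set[str]]:
    """For each event type with at least one observed atom, return the atoms still missing."""
    out = {}
    for event, atoms in CHEMISTRY.items():
        if atoms & observed:
            out[event] = set(atoms - observed)
    return out
```

Given `{"meeting", "payment"}`, the sketch reports `exchange` as complete (nothing missing), `transit` as awaiting `crossing`, and `rally` as awaiting `announcement` and `crowd`.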

We are arguing that the development of categorical abstraction and the viewing of abstract models of social events, called “event chemistry”, are essential to national security. We are arguing that the current procurement process is neither looking at nor nurturing the types of science that are needed in this case.

Response mechanisms to these threats must start with proper and clear intelligence about event structures expressed in the social world. Human sharing of tacit knowledge must lie at the foundation of these response mechanisms.

But transparent human knowledge sharing is not part of the culture within the intelligence communities. Moreover, current computer science, with its artificial intelligence and machine-based first-order predicate logic, is confused about the nature of natural complexity. Current computer science talks about a “formal complexity” because natural complexity has a nature that cannot be addressed by an abstraction expressible in first-order predicate logic. Formal complexity is merely overly complicated, and it is complicated because of the incorrectness imposed by the tacit assumption that the world is an abstraction.

Putting artificial intelligence in context is vital if we are to push the cognitive load back onto human specialists, whose cognition and perception can guide the sense-making activity. Computer science has made many positive contributions within the context of a myth based on a strong form of scientific reductionism. This myth is that the real world can be reduced, in every aspect, to the abstraction that is the formal system computer science instantiates as a computer program. Natural science is clear in rejecting this myth.

Understanding the difference between computer-mediated knowledge exchanges and human discourse in the “natural” setting is critically important. One of our challenges is due to advances in warfare capabilities, including the existence of weapons of mass destruction. Another obvious challenge is due to the existence of the Internet and other forms of communication systems. Economic globalization and the distribution of goods and services present yet another set of challenges. If the world social system is to be healthy, these security issues must be managed.

We have no choice but to develop transparency about environmental, genetic, economic and social processes. Human technology is now simply too powerful and too intrusive to allow simple economic and social processes to exercise fundamentalism in various and separate ways.

Event models must be derived from the data mining of global social discourse. But the science to do this has NOT yet been developed. We must define a science that has deep roots in legal theory, category theory, logic, and the natural sciences.

A secret government project is not a proper response to challenges in science. In any case, American democracy is resilient enough to conduct proper science and to develop knowledge technologies in public view. BCNGroup.org is calling for a Manhattan-type project to establish the academic foundation for the knowledge sciences.

Current national security requirements demand that this science be synthesized quickly from the available scholarship. Within this new science, stratified logics will compute event abstractions at one scale of observation and event-atom abstractions at a second scale. The atom abstractions are themselves to be derived from polling and data mining processes.

Again, we stress that the science needed has not been developed. But there is a wealth of scholarship that could be integrated quickly with only a small effort.

Information can be stratified into two layers of analysis.

The first layer is the set of individual polling results or the individual texts placed into social discourse. In real time, and as trended over time, categorical abstraction is developed from the repeated patterns within word structure, using polling methodology and machine learning algorithms.
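A minimal sketch of this first layer, assuming word pairs (bigrams) as the "repeated patterns within word structure" and a simple count difference between two time windows as the trend measure. Both choices, and the `min_rise` parameter, are hypothetical simplifications of the polling and machine learning methods mentioned above.

```python
from collections import Counter


def window_patterns(texts: list[str]) -> Counter:
    """Count word-pair patterns across all texts in one time window."""
    c = Counter()
    for t in texts:
        w = t.lower().split()
        c.update(zip(w, w[1:]))
    return c


def trending(prev: Counter, curr: Counter, min_rise: int = 2) -> dict:
    """Return patterns whose count rose by at least `min_rise` between two windows."""
    # Counter returns 0 for patterns absent from the earlier window.
    return {p: curr[p] - prev[p] for p in curr if curr[p] - prev[p] >= min_rise}
```

Running `window_patterns` on successive windows and comparing them with `trending` gives a crude real-time view of which patterns are gaining ground, without retaining who wrote what.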

The second layer is a derived aggregation of patterns that are analyzed to infer the behavior of social collectives and to represent the thematic structure of opinions. Drilling down into information about, or from, specific individuals will require government analysts to take a conscious step. The very act of drilling down from the abstract layer to the specific informational layer is therefore the point at which an enforceable legal barrier stands in protection of Constitutional rights.
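The second layer can be sketched as an aggregation that emits only theme-level counts. The keyword-to-theme mapping is hypothetical, and the rule that suppresses themes backed by fewer than `min_authors` distinct authors is a standard small-cell suppression technique swapped in here for illustration; it is not claimed to be part of the author's design.

```python
from collections import defaultdict

# Hypothetical mapping from first-layer words to opinion themes.
THEMES = {"prices": "economy", "jobs": "economy", "clinic": "health", "vaccine": "health"}


def theme_profile(posts: list[tuple[str, str]], min_authors: int = 3) -> dict[str, int]:
    """Aggregate (author, text) posts into per-theme counts of distinct authors.

    Only counts leave this function; themes backed by fewer than
    `min_authors` distinct authors are suppressed (small-cell suppression).
    """
    authors = defaultdict(set)
    for author, text in posts:
        for word in text.lower().split():
            if word in THEMES:
                authors[THEMES[word]].add(author)
    return {theme: len(a) for theme, a in authors.items() if len(a) >= min_authors}
```

No author identifiers appear in the output, so any move from a theme back to the people behind it has to go through a separate, deliberate drill-down step.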

New science/technology is needed to “see” events that lead to or support terrorism. Data mining is a start, as are the pattern recognition systems that have been developed. But we also need the synthesis of data into information, and a reification process that knows the importance of human-in-the-loop perception and feedback.

To control the computer mining and synthesis processes, we need something that stands in for natural language. Linguistic theory tells us that language use is not reducible to the algorithms expressed in computer science. But if “computers” are to be a mediator of social discourse, must not the type of knowledge representation be more structured than human language? What can we do?

The issues of knowledge evocation and of encoding knowledge representations shape the most critical inquiries.

According to our viewpoint, the computer does not, and cannot, have the tacit knowledge needed to disambiguate natural language, in spite of several decades of effort to create knowledge technologies that have “common sense”. On principled grounds, a community of natural scientists has argued that the computer will never have tacit knowledge.

Tacit knowledge is something experienced by humans. There is no known way to fully and completely encode tacit knowledge into rigidly structured standard relational databases.

A workaround for this quandary is suggested in terms of a Differential Ontology Framework (DOF) that has an open-loop architecture showing critical dependency on human sensory and cognitive acuity. An Appendix to this paper discusses the DOF.
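Because the DOF itself is described in the Appendix, the following is only a generic sketch of an open-loop update under one assumption: the machine may propose candidate concepts, but a human reviewer, modeled here as a `human_review` callback, decides what enters the ontology. The function and parameter names are hypothetical.

```python
from typing import Callable


def open_loop_update(ontology: set[str],
                     candidates: list[str],
                     human_review: Callable[[str], bool]) -> set[str]:
    """Machine proposes, human decides: nothing enters the ontology unreviewed.

    Returns a new set; the input ontology is left unchanged.
    """
    # Candidates already in the ontology are not sent for review again.
    accepted = {c for c in candidates if c not in ontology and human_review(c)}
    return ontology | accepted
```

The loop stays open in the sense that the machine never closes it on its own: removing the human callback leaves no path by which new concepts can be added.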

The war presents daunting challenges. Asymmetric threats are organizing distributed communities to attack the vulnerabilities of our economic system.

A large number of social organizations have developed organically around the economic value of one-to-many communication systems. Developing agility and fidelity in our information systems is the strongest defense against these asymmetric threats. The differential ontology framework may enable processes that have one-to-many structural coupling to make the transition to many-to-many technology.

The asymmetric threat uses many-to-one activity, loosely organized by the hijacking of Islam for private hatred and grief. The defense against this threat is the development of many-to-many communication systems.

Many-to-many technologies provide relief from the stealth on which asymmetric threats depend. The relief comes when machine ontology is used to represent social discourse in the abstract. This representation can be built through the development and algorithmic interaction of human-structured knowledge artifacts.

The evolution of user-structured knowledge artifacts in knowledge ecosystems must be validated by the community’s perception of that structure. In this way the interests of communities are established through a private-to-public vetting of perception. Without privacy protection built into the technology and guaranteed by law, this validation cannot succeed, and the technology will fail to have fidelity to the true structure of social discourse.
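The private-to-public vetting can be sketched as a publication rule on an artifact: it stays private to its author until a quorum of distinct community members endorse it. The `Artifact` class, the quorum of three and the exclusion of self-endorsement are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """Hypothetical knowledge artifact that starts private to its author."""
    author: str
    content: str
    endorsers: set[str] = field(default_factory=set)
    public: bool = False

    def endorse(self, member: str, quorum: int = 3) -> None:
        # Self-endorsement does not count toward community validation.
        if member != self.author:
            self.endorsers.add(member)  # a set, so repeat endorsements count once
        # Publication happens only once enough distinct members agree.
        if len(self.endorsers) >= quorum:
            self.public = True
```

Until the quorum is reached the artifact remains the author's private structure; publication is the community's act, not the author's.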

Knowledge validation occurs as private tacit knowledge becomes public. The relief from the asymmetric threat evolves because community structure is facilitated. The validation of artifacts leads to structured community knowledge production processes and these processes differentiate into economic processes. These benefits can be allowed to accrue to communities who have now, or might have in the future, a tendency to support terrorism based on a sense of injustice. A careful dance over the issues of privacy and justice is required. Global repression of all communities that feel injustice is not consistent with the strength of the American people. Our strength is in our multi-culturalism and our Constitution.

Individual humans, small coherent social units, and business ecosystems are all properly regarded as complex systems embedded in other complex systems. Understanding how events unfold in this environment has not been easy. But the war requires that science devote attention to standing up information production systems that are transparent and many-to-many.

Relational databases and artificial intelligence have been a good first effort, but more is demanded. Schema-independent data representation is required to capture the salient information within implicit ontologies.

Current standards often ignore certain difficult aspects of the complex environment and attempt to:

1) Navigate between models and the perceived ideal system state, or

2) Construct models with an anticipation of process engineering and change management bridging the difference between the model and reality.

The new knowledge science changes this dynamic by allowing individuals to add and subtract from a common knowledge base composed of topic / question hierarchies supported within the differential ontology framework.
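A topic/question hierarchy that individuals can add to and subtract from might look like the sketch below. The `Topic` class and its `add` and `remove` methods are hypothetical; the DOF's actual data structures are not specified here.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical node in a shared topic/question hierarchy."""
    name: str
    questions: list[str] = field(default_factory=list)
    children: dict[str, "Topic"] = field(default_factory=dict)

    def add(self, path: list[str]) -> "Topic":
        """Add (or reuse) the topic at `path`, creating parent topics as needed."""
        node = self
        for part in path:
            node = node.children.setdefault(part, Topic(part))
        return node

    def remove(self, path: list[str]) -> bool:
        """Subtract the topic at `path` and its subtree; return True if it existed."""
        parent = self
        for part in path[:-1]:
            if part not in parent.children:
                return False
            parent = parent.children[part]
        return parent.children.pop(path[-1], None) is not None
```

For example, `root.add(["health", "clinics"]).questions.append("Which clinics closed?")` attaches a question under a new subtopic, and `root.remove(["health", "clinics"])` takes it away again.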

This technology can become transparent because information technology has matured and been refined.

We will close by addressing a specific conceptual knot. We will untie this knot by separating issues related to natural language use.

Note on Language and Linguistics

Language and linguistics are relevant to our work for three reasons.

First, the new knowledge technologies are an extension of natural spoken languages. The technology reveals itself within a community as a new form of social communication.

Second, we are establishing knowledge ecosystems using peer-to-peer ontology streaming. Natural language and the ontologies serve a similar purpose. However, the ontologies are specialized around virtual communities existing within an Internet culture. Thus ontology streaming represents an extension of the phenomenon of naturally occurring language.

Third, the terminology used in various disciplines is often not adequate for interdisciplinary discussion. Thus we reach into certain schools of science, into economic theory and into business practices to find bridges between these disciplines. This work on interdisciplinary terminology is kept in the background, as many difficult challenges have not yet been properly addressed. General systems theory is useful in understanding this issue.

These issues are in a context. Within this context, we make a distinction between computer computation, language systems, and human knowledge events. The distinction opens the door to certain deep theories about the nature of human thought.

Within existing scholarly literatures one can ground a formal notation defining data structures that store and allow the manipulation of topical taxonomies and related resources existing within the knowledge base.

The differential ontology framework consists of knowledge units and auxiliary resources used in report generation and trending analysis. The new knowledge science specifically recognizes that the human mind binds together the topics of a knowledge unit, and holds that the computer cannot do this binding for us. The rules of how cognitive binding occurs are not captured in the data structure of the knowledge unit, as doing so is regarded as counter to the differential ontology framework. The human remains central to all knowledge events, and the relationship that a human has with his or her environment is taken into account. The individual human matters, always.
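The design choice of leaving binding out of the data structure can be made concrete with a sketch. The `KnowledgeUnit` fields and the `report` function are hypothetical; what the sketch shows is the absence of any field encoding how topics bind together, so a report can only present the topics for a human to interpret.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeUnit:
    """Hypothetical knowledge unit: topics plus auxiliary resources.

    Deliberately, there is no field for how the topics bind together;
    that binding is left to the human reader.
    """
    topics: frozenset[str]
    resources: tuple[str, ...] = ()  # e.g. report templates or trend queries


def report(unit: KnowledgeUnit) -> str:
    """List the topics (sorted only for stable display); interpretation is left to the reader."""
    return "Topics: " + ", ".join(sorted(unit.topics))
```

Storing the topics as an unordered `frozenset` avoids smuggling in a binding through ordering or nesting; any relationship among them exists only in the mind of the person reading the report.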